Introduction

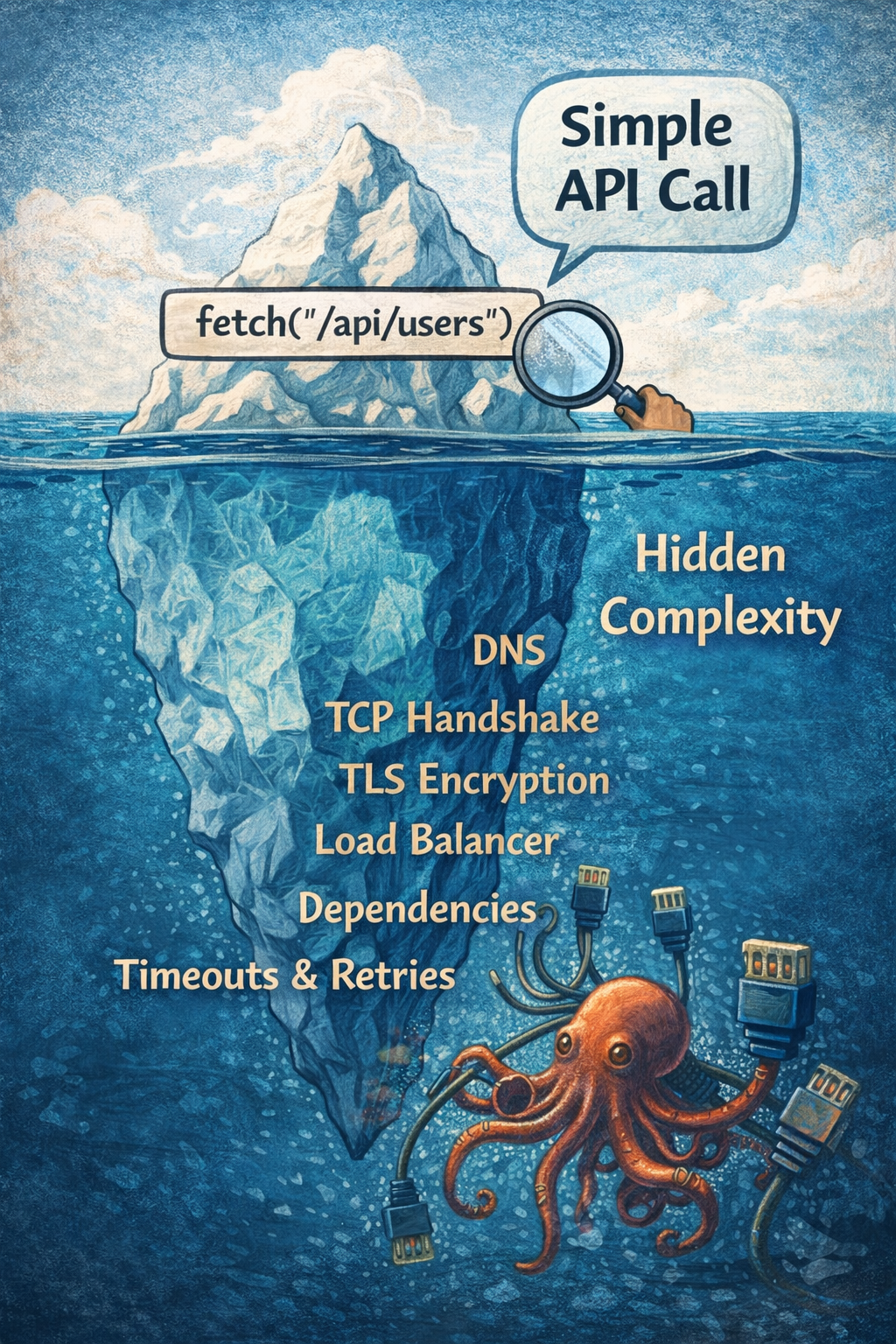

You write this:

`fetch("/api/users")`

And magically, a list of users appears.

No sockets. No packets. No cables under the ocean. Just clean JavaScript and a promise that resolves.

Modern APIs are incredibly good at hiding complexity. So good that it’s easy to believe an API call is basically a function call over the network.

It’s not.

That single line kicks off a chain of events spanning your browser, operating system, routers, load balancers, kernels, application runtimes, and databases, then back again, all while trying (and sometimes failing) to be fast, reliable, and secure.

So what actually happens between the client and the server?

Let's peel the stack. One layer at a time.

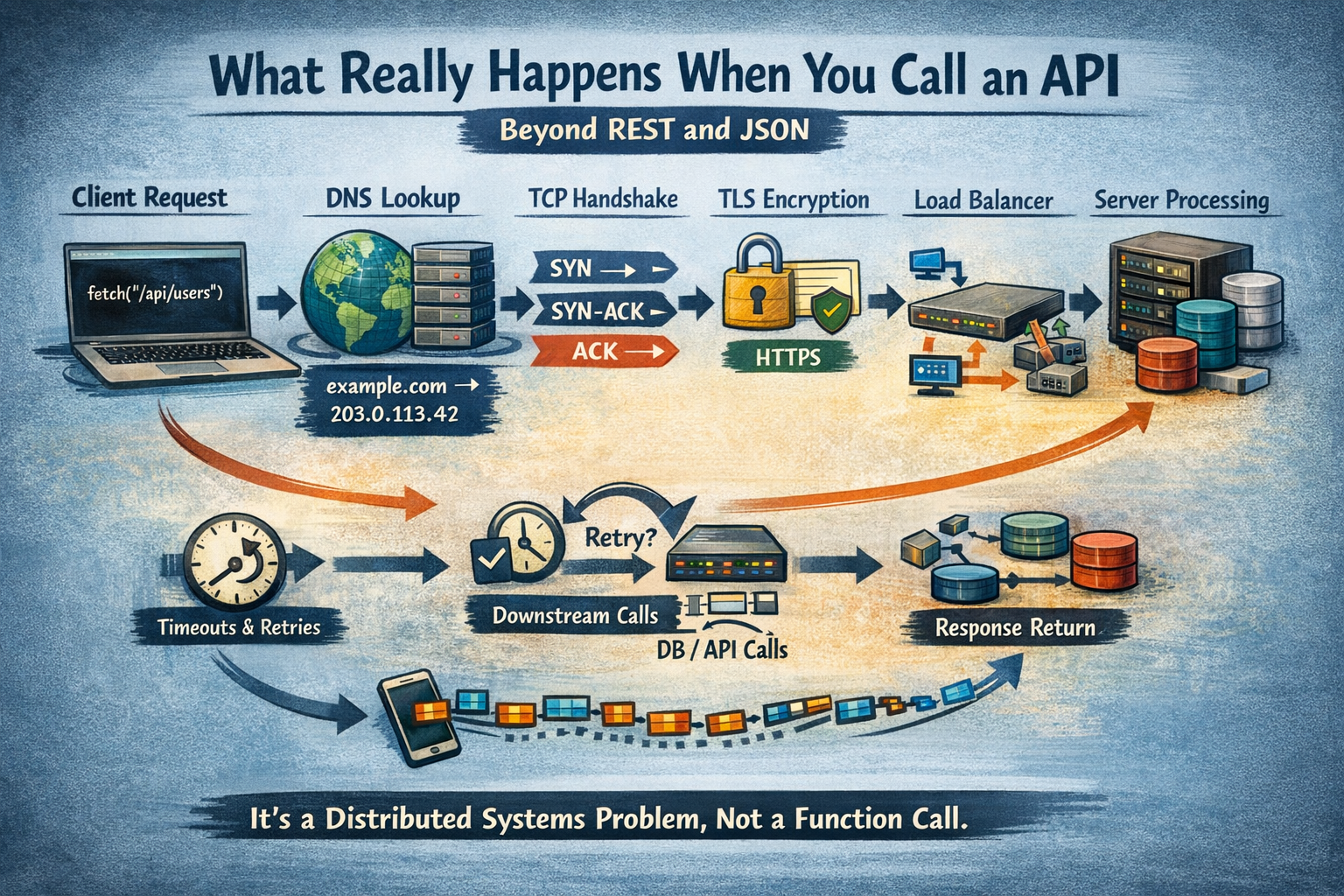

1. DNS: Finding the Server

First problem:

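`/api/users` means nothing to the network. It’s only a path. The browser fills in the hostname from the page’s origin, and that hostname is what has to be located.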
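A relative path like this only becomes routable once it is expanded against the page’s origin. Here is a minimal sketch using the standard `URL` constructor, assuming the page was served from the placeholder origin `https://example.com` (that origin is an assumption, not something from the original call):

```javascript
// Assumption: the page making the call was loaded from this placeholder origin.
const pageOrigin = "https://example.com";

// The standard URL constructor resolves a relative path against a base.
const requestUrl = new URL("/api/users", pageOrigin);

console.log(requestUrl.href);     // https://example.com/api/users
console.log(requestUrl.hostname); // example.com  <- the part that needs an IP address
```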

Networks don’t understand domain names. They understand IP addresses.

DNS is the phonebook of the internet. You ask:

“What’s the IP address for this domain?”

And DNS replies:

“Try 203.0.113.42.”

But this lookup is rarely a single step.

There’s caching everywhere:

- The browser might already know

- The OS might have it cached

- Your router might remember

- Your ISP’s resolver might answer

- Or the query walks the DNS hierarchy: root, TLD, then authoritative name servers
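The layering above can be modelled as a chain of caches, each one answering from memory or asking the layer behind it. This is a toy sketch, not how any real resolver is implemented: `makeCachingResolver`, the layer names, the TTL, and the fake clock are all invented for illustration.

```javascript
// Hypothetical model: every resolver is a function
// hostname -> { address, ttlMs, answeredBy }.
function makeCachingResolver(name, upstream, now = Date.now) {
  const cache = new Map(); // hostname -> { address, expiresAt }
  return function resolve(hostname) {
    const hit = cache.get(hostname);
    if (hit && hit.expiresAt > now()) {
      // Fresh entry: answer locally, nothing upstream is contacted.
      return { address: hit.address, ttlMs: hit.expiresAt - now(), answeredBy: name };
    }
    // Miss or expired: ask the next layer and remember its answer.
    const answer = upstream(hostname);
    cache.set(hostname, { address: answer.address, expiresAt: now() + answer.ttlMs });
    return answer;
  };
}

// Stand-in for walking the DNS hierarchy; the address and TTL are made up.
const authoritative = (hostname) => ({
  address: "203.0.113.42",
  ttlMs: 60000,
  answeredBy: "authoritative",
});

let fakeNow = 0; // fake clock so the example is deterministic
const clock = () => fakeNow;

const ispResolver = makeCachingResolver("isp", authoritative, clock);
const osResolver = makeCachingResolver("os", ispResolver, clock);
const browserResolver = makeCachingResolver("browser", osResolver, clock);

console.log(browserResolver("example.com").answeredBy); // authoritative
console.log(browserResolver("example.com").answeredBy); // browser
fakeNow = 61000; // every cached entry is now past its TTL
console.log(browserResolver("example.com").answeredBy); // authoritative
```

The first lookup pays for the whole chain, the second is answered by the nearest cache, and once the TTL runs out the cost comes back.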

When DNS works, it’s invisible.

When it doesn’t, everything breaks.

No DNS → no IP → no connection → no API.

This is why DNS issues feel like “the internet is down.”

2. Establishing a Connection: TCP

Now that the client knows where to send the request, it needs a connection.

This is where TCP comes in.

TCP provides:

- Reliable delivery

- In-order delivery of the byte stream

- Congestion control

Before any data flows, TCP performs a handshake:

- Client: “Can I talk to you?” (SYN)

- Server: “Yes, I’m listening.” (SYN-ACK)

- Client: “Great, let’s talk.” (ACK)

That handshake costs time: a full network round trip before any request data can be sent.

This is why:

- Cold connections are slower

- Connection pooling matters

- Keep-alive is not an optimization; it’s survival

Every new connection is a latency tax.
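To make the tax concrete, here is a deliberately tiny connection pool that counts how often the setup cost is paid. `ConnectionPool` and the `connect` callback are hypothetical stand-ins; no real socket is opened.

```javascript
// Hypothetical pool: `connect` stands in for the expensive TCP (and TLS) setup.
class ConnectionPool {
  constructor(connect) {
    this.connect = connect;
    this.idle = [];      // open connections waiting to be reused
    this.handshakes = 0; // how many times the setup cost was paid
  }

  acquire() {
    if (this.idle.length > 0) {
      return this.idle.pop(); // warm connection: no handshake
    }
    this.handshakes += 1;     // cold connection: pay the round trips
    return this.connect();
  }

  release(connection) {
    this.idle.push(connection); // keep it alive for the next request
  }
}

const pool = new ConnectionPool(() => ({ openedAt: Date.now() }));

for (let i = 0; i < 5; i++) {
  const connection = pool.acquire();
  // ... send one request over `connection` ...
  pool.release(connection);
}

console.log(pool.handshakes); // 1 (five sequential requests, one handshake)
```

A production pool also needs a size limit, idle eviction, and a way to discard broken connections. The sketch leaves those out to keep the reuse path visible.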

3. Security Layer: TLS

If this is HTTPS (it is), now comes TLS.

TLS exists because sending data in plain text over the internet is… optimistic.

Anyone on the path could read or modify it.

TLS provides:

- Encryption

- Authentication

- Integrity

Certificates are identity cards for servers.

Browsers trust certain authorities, and those authorities vouch for certificates.

The TLS handshake:

- Verifies the certificate

- Negotiates encryption keys

- Establishes a secure channel

All of this happens before your API request is even sent.

Security adds latency.

Skipping it adds disasters.
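How much latency? A rough way to count it is in round trips. The sketch below assumes full handshakes only (no TLS session resumption, 0-RTT, TCP Fast Open, or QUIC), a DNS answer that is already cached, and zero server processing time. The function name and the 80 ms round-trip time are made up for illustration.

```javascript
// Round trips spent before the first response byte can arrive,
// under the assumptions listed above.
function roundTripsToFirstByte({ reusedConnection, tlsVersion }) {
  if (reusedConnection) return 1; // request + response only
  const tcpHandshake = 1; // SYN, SYN-ACK; the client's ACK can travel with its first data
  const tlsHandshake = tlsVersion === "1.3" ? 1 : 2; // a full TLS 1.2 handshake needs two
  return tcpHandshake + tlsHandshake + 1; // plus the request itself
}

const rttMs = 80; // made-up round-trip time

console.log(roundTripsToFirstByte({ reusedConnection: false, tlsVersion: "1.3" }) * rttMs); // 240
console.log(roundTripsToFirstByte({ reusedConnection: false, tlsVersion: "1.2" }) * rttMs); // 320
console.log(roundTripsToFirstByte({ reusedConnection: true, tlsVersion: "1.3" }) * rttMs);  // 80
```

Under these assumptions a cold HTTPS request costs three to four times what a warm one does, before the server has done any work.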

4. The Request Enters the Cloud

Your request is now encrypted and on its way.

In most production setups, it doesn’t hit your server directly.

It hits a load balancer.

Load balancers decide:

- Is this backend healthy?

- Which instance should receive traffic?

- Should this connection be reused?

Some operate at:

- Layer 4 (TCP-level routing)

- Layer 7 (HTTP-aware routing)

This is why:

- Requests don’t always hit the same server

- Servers can be replaced without downtime

- Your app survives individual node failures
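One of the simplest policies that gives these properties is round-robin that skips unhealthy backends. The sketch below is one possible policy, not how any particular load balancer works; `makeRoundRobinBalancer`, the backend names, and the `healthy` flag are invented for illustration.

```javascript
// Hypothetical round-robin picker that skips backends marked unhealthy.
function makeRoundRobinBalancer(backends) {
  let next = 0; // index to try first on the next pick
  return function pick() {
    for (let i = 0; i < backends.length; i++) {
      const index = (next + i) % backends.length;
      if (backends[index].healthy) {
        next = (index + 1) % backends.length;
        return backends[index];
      }
    }
    return null; // nothing healthy: the caller has to fail the request
  };
}

const backends = [
  { name: "app-1", healthy: true },
  { name: "app-2", healthy: false }, // failed its health check
  { name: "app-3", healthy: true },
];
const pick = makeRoundRobinBalancer(backends);

console.log([pick(), pick(), pick()].map((b) => b.name)); // [ 'app-1', 'app-3', 'app-1' ]

backends[1].healthy = true; // the node recovers
console.log(pick().name);   // app-2
```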

5. Inside the Server Process

Eventually, the request lands on a machine.

Now the operating system takes over.

- Packets arrive at the kernel

- TCP reassembles them

- Socket buffers fill

- Your application runtime is notified

How the request is handled depends on the model:

- Thread per request

- Event loop

- Async IO

- Worker pools

Frameworks hide this complexity, but it still exists.

Threads can block.

Event loops can stall.

Context switches cost time.

Latency often hides in queues you never see.
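A small simulation shows how that hidden queueing adds up. It assumes a single worker that handles one request at a time, a fixed service time, and arrival times given in ascending order. The function name and the numbers are made up.

```javascript
// Simulates one worker handling requests strictly one after another.
// Assumes `arrivalsMs` is sorted in ascending order.
function simulateSingleWorker(arrivalsMs, serviceMs) {
  let freeAt = 0; // time at which the worker finishes its current request
  return arrivalsMs.map((arrivedAt) => {
    const startedAt = Math.max(arrivedAt, freeAt); // wait if the worker is busy
    freeAt = startedAt + serviceMs;
    return { queuedMs: startedAt - arrivedAt, totalMs: freeAt - arrivedAt };
  });
}

// Three requests arrive 1 ms apart; each needs 10 ms of work.
console.log(simulateSingleWorker([0, 1, 2], 10));
// [
//   { queuedMs: 0, totalMs: 10 },
//   { queuedMs: 9, totalMs: 19 },
//   { queuedMs: 18, totalMs: 28 }
// ]
```

Every request does the same 10 ms of work, yet the third caller waits 28 ms. The handler’s own timing would report 10 ms for all three.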

6. Downstream Dependencies

Your API handler rarely does just one thing.

It might:

- Query a database

- Read from a cache

- Call another service

- Publish an event

Each of those is usually another network hop.

Which means:

- More latency

- More failure points

- More retries

This is how simple systems slowly turn into distributed systems.

And distributed systems fail in creative ways.
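Some back-of-the-envelope arithmetic shows why. The dependency list and every number in it are invented for illustration, and the availability figure assumes the dependencies fail independently.

```javascript
// Made-up dependency profile for one handler; none of these numbers are measurements.
const dependencies = [
  { name: "cache", latencyMs: 2, availability: 0.999 },
  { name: "database", latencyMs: 20, availability: 0.999 },
  { name: "other-service", latencyMs: 50, availability: 0.999 },
];

// Calls made one after another: latencies add up.
const sequentialMs = dependencies.reduce((sum, d) => sum + d.latencyMs, 0);

// Independent calls made concurrently: the slowest one sets the pace.
const parallelMs = Math.max(...dependencies.map((d) => d.latencyMs));

// The handler needs all of them, so (assuming independent failures)
// the availabilities multiply.
const combinedAvailability = dependencies.reduce((p, d) => p * d.availability, 1);

console.log(sequentialMs);                    // 72
console.log(parallelMs);                      // 50
console.log(combinedAvailability.toFixed(4)); // 0.9970
```

Three dependencies at “three nines” each leave the handler noticeably below three nines, and that is before counting its own failures.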

7. Timeouts, Retries, and Partial Failures

Failures in production are rarely binary.

Things don’t fail fast.

They fail slowly.

That’s why timeouts matter more than success paths. Without one, a slow dependency ties up connections and threads until the caller gives up.

Retries sound harmless: “Just try again.”

But retries:

- Multiply load

- Increase tail latency

- Can trigger retry storms

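Idempotency becomes critical: the first attempt may have succeeded even though the client never saw the response, so a retry must be safe to apply twice.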

Sometimes the correct action is to fail fast and stop retrying.
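One common way to combine these ideas is a per-attempt timeout, a small retry budget, and exponential backoff with jitter. The sketch below is one reasonable shape, not a recommendation for specific values. `getWithRetry`, `backoffDelayMs`, and all defaults are hypothetical, and it only makes sense for requests that are safe to repeat, such as an idempotent GET.

```javascript
// Full-jitter exponential backoff: a random delay up to min(cap, base * 2^attempt).
function backoffDelayMs(attempt, baseMs = 100, capMs = 2000, random = Math.random) {
  return random() * Math.min(capMs, baseMs * 2 ** attempt);
}

// Hypothetical helper for idempotent GETs. `fetchImpl` is injectable for testing.
async function getWithRetry(url, { attempts = 3, timeoutMs = 1000, fetchImpl = fetch } = {}) {
  let lastError;
  for (let attempt = 0; attempt < attempts; attempt++) {
    // Per-attempt timeout; it covers the wait for the response headers.
    const controller = new AbortController();
    const timer = setTimeout(() => controller.abort(), timeoutMs);
    try {
      const response = await fetchImpl(url, { signal: controller.signal });
      if (response.status < 500) return response; // success or client error: retrying will not help
      lastError = new Error(`server error ${response.status}`);
    } catch (error) {
      lastError = error; // timeout or network failure
    } finally {
      clearTimeout(timer);
    }
    if (attempt < attempts - 1) {
      // Spread retries out so many clients do not hammer the server in lockstep.
      await new Promise((resolve) => setTimeout(resolve, backoffDelayMs(attempt)));
    }
  }
  throw lastError; // retry budget spent: fail fast instead of piling on
}
```

A call such as `getWithRetry("/api/users", { attempts: 2, timeoutMs: 500 })` makes at most two attempts and waits at most roughly a second for headers plus one short backoff, which puts a ceiling on how long the caller can be stuck.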

8. The Response Journey Back

Eventually, your application returns a response.

That response:

- Is serialized

- Written to a socket

- Split into packets

- Encrypted

- Sent back across the network

On the client:

- Packets are reassembled

- Decrypted

- Parsed

- Converted into objects

- Finally delivered to your code
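The two ends of that journey can be sketched with standard APIs. The payload is made up, and the middle of the trip (packets, encryption, the network) is represented only by a comment.

```javascript
// Server side: object -> JSON text -> bytes written to the socket.
const users = [{ id: 1, name: "Ada" }]; // made-up payload
const jsonText = JSON.stringify(users);
const bytesOnTheWire = new TextEncoder().encode(jsonText); // UTF-8 bytes

// (TCP segmentation, TLS encryption, and the network happen here.)

// Client side: bytes -> text -> objects.
// This is roughly the work response.json() does on your behalf.
const receivedText = new TextDecoder().decode(bytesOnTheWire);
const receivedUsers = JSON.parse(receivedText);

console.log(bytesOnTheWire.length); // 23
console.log(receivedUsers[0].name); // Ada
```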

When users say an API “feels slow,” they’re feeling the latency, and the variance, added up across all these layers.

9. Why APIs Fail in Production

Most outages aren’t caused by one thing.

Common causes:

- Latency spikes

- Connection pool exhaustion

- DNS resolution failures

- Misconfigured load balancers

- Cascading retries

Failures rarely stay isolated.

They spread.
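Cascading retries are a good example of how they spread. If every layer in a call chain retries on its own, the worst-case load on the deepest layer grows exponentially with the depth of the chain. The function name and the chain (client, gateway, service) are hypothetical.

```javascript
// Worst case: every layer makes `attemptsPerLayer` tries for each request it receives.
function worstCaseCallsAtBottom(attemptsPerLayer, retryingLayers) {
  return attemptsPerLayer ** retryingLayers;
}

// Only the client retries (3 attempts):
console.log(worstCaseCallsAtBottom(3, 1)); // 3

// Client, gateway, and service each retry (3 attempts each):
console.log(worstCaseCallsAtBottom(3, 3)); // 27
```

One user action can become 27 calls against a database that was already struggling, which is why retrying at a single layer is often the safer design.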

10. Why This Mental Model Matters

With this mental model, you:

- Design better APIs

- Pick sane timeouts

- Debug faster

- Avoid accidental complexity

- Communicate better with infra teams

You stop asking: “Why is the API slow?”

And start asking: “Where is the latency accumulating?”

That shift changes everything.
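In a browser, the Resource Timing API exposes per-phase timestamps that help answer that question. Here is a minimal sketch, assuming an entry shaped like `PerformanceResourceTiming`. `breakDownTiming` is a hypothetical helper and the sample timestamps are made up.

```javascript
// Splits one PerformanceResourceTiming-shaped entry into per-phase durations (ms).
function breakDownTiming(entry) {
  const usedTls = entry.secureConnectionStart > 0; // 0 means no TLS handshake was recorded
  const tcpEnd = usedTls ? entry.secureConnectionStart : entry.connectEnd;
  return {
    dns: entry.domainLookupEnd - entry.domainLookupStart,
    tcp: tcpEnd - entry.connectStart,
    tls: usedTls ? entry.connectEnd - entry.secureConnectionStart : 0,
    waiting: entry.responseStart - entry.requestStart, // network + server time to first byte
    download: entry.responseEnd - entry.responseStart,
  };
}

// Made-up timestamps shaped like a browser entry.
const sampleEntry = {
  domainLookupStart: 0, domainLookupEnd: 30,
  connectStart: 30, secureConnectionStart: 70, connectEnd: 150,
  requestStart: 150, responseStart: 400, responseEnd: 420,
};

console.log(breakDownTiming(sampleEntry));
// { dns: 30, tcp: 40, tls: 80, waiting: 250, download: 20 }
```

In a real page, something like `performance.getEntriesByType("resource").map(breakDownTiming)` would feed it actual entries. Cross-origin resources may report zeros for most phases unless the server opts in with a `Timing-Allow-Origin` header.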

Conclusion

An API call is not just JSON over HTTP.

It’s name resolution, connection negotiation, encryption, routing, scheduling, IO, dependency coordination, and failure handling, all hidden behind a function call.

Once you see the layers, you can’t unsee them.

An API call is a distributed systems problem pretending to be a function call.